Why ChatGPT Creates Scientific Citations — That Don’t Exist

Read for free here: https://open.substack.com/pub/westreich/p/why-chatgpt-creates-scientific-citations

Welcome to 2025. AI-generated content keeps getting better: in online debates, the latest ChatGPT 4.0 model has been scored as consistently more persuasive than real humans.

But persuasive does not equal accurate, and AI still makes mistakes. These mistakes are often called hallucinations, because the AI confidently states something that… just isn’t true.

One example is the recent White House report from Robert F. Kennedy Jr.’s Make America Healthy Again (MAHA) Commission.

The report used references that didn’t actually exist.

Or, if a reference did exist, the report got the details of it wrong — a bit like citing Harry Potter, but claiming that it was published in 2024 by famous fantasy author George R. R. Martin.

How could this happen?

It all comes down to citations, and the finicky little distinction between looking correct and actually being correct.

What a citation is…

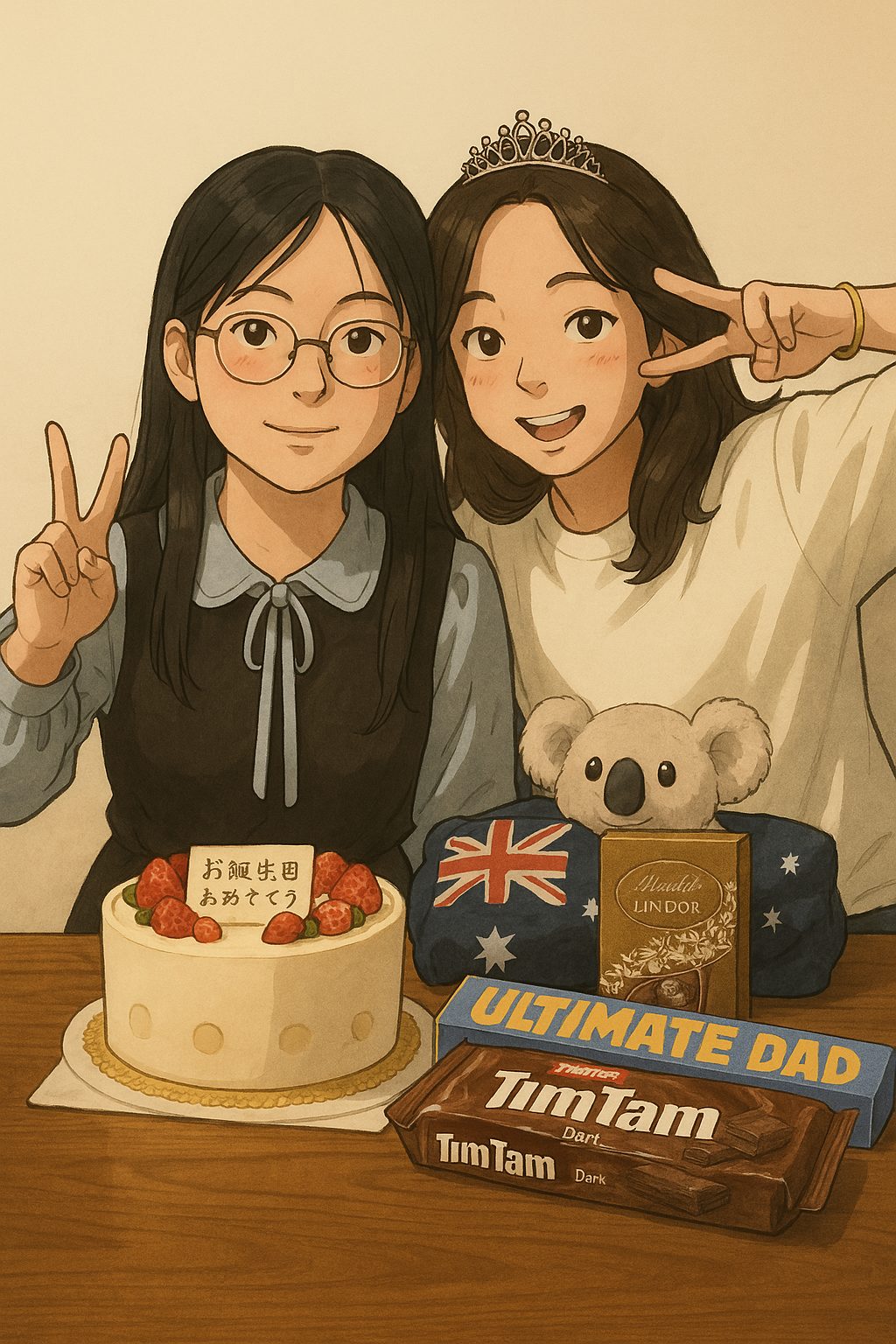
